Average Practice

I’ve talked about best practices a bunch of times. Generally, in praise of them, with the caveat that context is important. What was the best practice for one team, in one specific situation, might not be the best practice for you in your situation.

Over time, best practices get diluted. The more they get described and implemented apart from the original context they came out of, the less specific and focused they get. They become generic advice that applies everywhere. Also, by the time you hear about them, the team that came up with them has probably changed what they’re doing. Because their situation changed, the practices they follow have also changed to be optimized for that new situation.

Blindly following best practices doesn’t mean you’re doing what the best teams (whatever that actually means) are doing or that you’re going to get the same results. Think about it. Most documentation of best practices is so lacking in specifics that it’s easy to convince yourself that you’re already following them. And if it does have specifics, they are so focused on the original situation that you can’t apply them in yours. What you end up with isn’t the best practice for you. It’s an average practice that is probably a very good idea.

Don’t get me wrong. I’m not saying you should ignore best practices. You should look closely at them. They may not tell you exactly what to do in order to get the same results as their originator, but they usually provide a very good baseline. Instead of considering them a goal and something to strive for, think of them as defining a baseline that is pretty good, but could be adjusted and optimized to provide even better results for your specific situation.

So don’t avoid best practices. The exact opposite in fact. Embrace them. Embrace them so hard you understand what problem they were created to solve. Then look at how they actually contributed to solving the problem, in the original context. Then look at how that context is the same as yours and how it differs. Then, and only then, should you start to think about how to apply a modified version of the best practice in your situation.

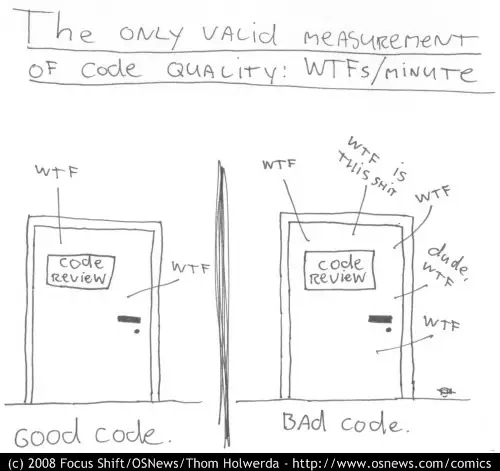

Consider the idea of code reviews. At some of the biggest tech firms (Microsoft, Google, Amazon, Meta, etc.), there are bespoke tools for managing code reviews and ensuring that (almost) every change is reviewed by the right folks in a timely fashion. These companies all have billion-dollar market caps and huge profits. Therefore, if you want to have that kind of success, you need to build your own bespoke system to do the same, or even better, find the FOSS version of one of those systems and do exactly what they did, right?

Wrong. First, what problem was that system built to solve? What were the incentives and counterincentives in the environment it was built in? Consider the Microsoft Windows team. 1000s of developers, broken into larger and smaller orgs that need to work together. That need to share knowledge. That need to protect themselves from each other’s best intentions. They need to build 1000s of executables and libraries, in concert, quickly, and test them separately and together. The result is the Virtual Build Lab and all of the tooling and infrastructure around it. Microsoft has a large team of people whose only job is to run the system that does builds of Windows. 100s of folks who make sure the right changes go into the right libraries and executables and are then packaged up into some deployable thing that can be run through a set of automated tests, which are built and maintained by an equivalent team. So if you want to build and deploy something as ubiquitous as Windows, you need to do the same thing.

Or not. Do you have 1000s of developers working on the same product? Do you have millions of lines of source code? Do you need to maintain backward compatibility with 20-year-old software and hardware? Can you afford to dedicate 100 people to running your build system? Probably not, so why try?

Instead, look at what core business problems they’re trying to solve. Allow different teams to develop at their own pace. Make sure all teams have access to the latest code. Make sure builds don’t slow everyone down (too much). Decouple teams, but make sure the right people look at the right code before it gets into the system. Once you understand what they were trying to do, decide how much of that you care about.

Maybe decoupling with proper oversight is the problem you really want to solve. So solve that problem. At your scale. With the tools you already have at your disposal. Augment those tools as needed, but only with what’s needed. For example, something that assigns and requires specific reviewers based on the section of the codebase. Or maybe mob/ensemble programming solves the real problem, and you don’t even need to have additional code reviews after the code is written.
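
To make "assign reviewers based on the section of the codebase" concrete, here is a minimal sketch of what a small-scale version might look like. Everything in it is hypothetical: the `OWNERS` map, the reviewer names, and the helper functions are invented for illustration and are not taken from any real tool. It assumes a simple rule where the longest matching directory prefix decides who must review a file.

```python
from pathlib import PurePosixPath

# Hypothetical ownership map: directory prefix -> people who can approve it.
OWNERS = {
    "billing": {"alice"},
    "api": {"bob", "carol"},
    "api/internal": {"erin"},
}
# Hypothetical fallback for files no prefix claims.
DEFAULT_OWNERS = {"dana"}


def owners_for(path: str) -> set[str]:
    """Return the owners of the longest directory prefix matching `path`."""
    parts = PurePosixPath(path).parts
    for depth in range(len(parts), 0, -1):
        prefix = "/".join(parts[:depth])
        if prefix in OWNERS:
            return OWNERS[prefix]
    return DEFAULT_OWNERS


def missing_approvals(changed_files, approvals):
    """Map each changed file that no owner has approved to its owners."""
    approved_by = set(approvals)
    missing = {}
    for path in changed_files:
        owners = owners_for(path)
        if not owners & approved_by:
            missing[path] = owners
    return missing


if __name__ == "__main__":
    changed = ["billing/invoice.py", "api/internal/auth.py"]
    for path, owners in missing_approvals(changed, {"alice"}).items():
        print(f"{path} still needs a review from one of: {sorted(owners)}")
```

A script like this could run as a check before merging. Whether you need even this much depends on the problem you are actually solving; many hosting platforms already offer a built-in equivalent, and at a small enough scale a conversation may do the job.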

Remember, the goal is to solve the business problem and provide value, not to implement what someone else did. So next time someone tells you that you should do what