Indirection Vs. Abstraction

I’ve heard it said that there’s no problem in computer science that can’t be solved by another level of indirection. I’ve also heard that there’s no problem in computer science that can’t be solved by another level of abstraction. That’s the same thing, right? Wrong.¹

Let’s start with some definitions.

Abstraction: In software engineering and computer science, abstraction is the process of generalizing concrete details, such as attributes … to focus attention on details of greater importance.

Indirection: In computer programming, an indirection (also called a reference) is a way of referring to something using a name, reference, or container instead of the value itself.

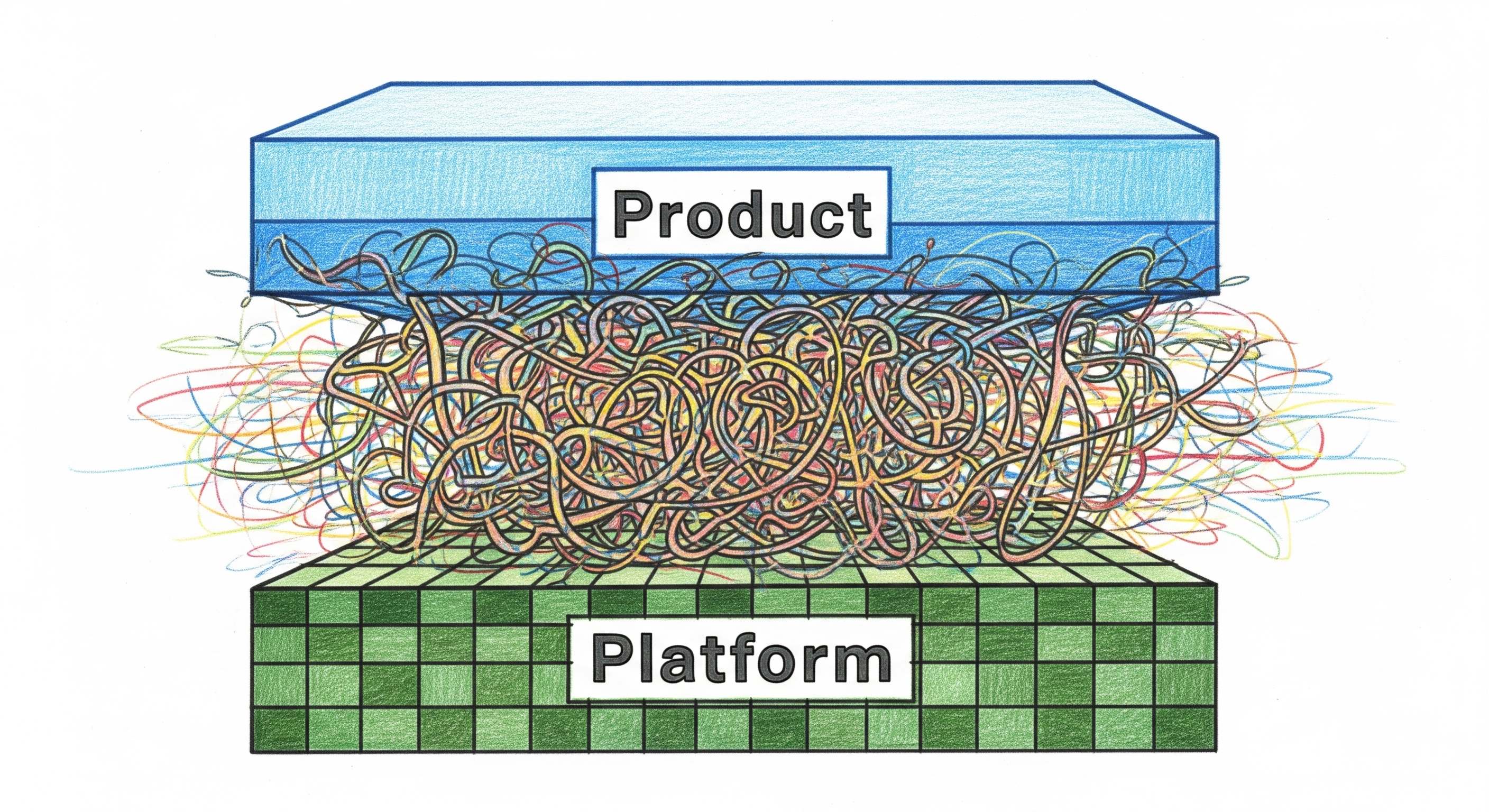

Here’s the thing. Abstractions provide simplicity by hiding details. Indirections provide flexibility by introducing a decision point. Both are important. You can’t write effective, resilient, maintainable software without both. And since you always reach the underlying thing through the abstraction rather than directly, every abstraction is also an indirection. The reverse doesn’t hold.

Consider dependency injection. It’s an indirection. The goal is to let the developer not care which thing got injected. The operations, the verbs if you will, are defined by the thing on the other side of the indirection. Instead of calling some resource directly, you get told what resource to use. That makes it more flexible. Regardless of what you get, though, it needs to act exactly the same. Whether you’re writing your data to the production database, a shared staging version, or your own private one, you know you’re writing to a database and the API you use reflects that. You’ve also made the overall system a bit more complex. And you’ve made it harder for the person looking at the code to know where the data was written, because they have to figure out what was injected.
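To make that concrete, here’s a minimal Python sketch of injection as an indirection. Every name in it (`Database`, `InMemoryDatabase`, `OrderRecorder`) is hypothetical, made up for illustration, and a small in-memory class stands in for the real production and private databases. Notice that the verbs (`insert`, `select`) still belong to the database. The only thing that changed is who decides which database you get.

```python
from typing import Protocol


class Database(Protocol):
    """The verbs come from the database itself; nothing is hidden or renamed."""

    def insert(self, table: str, row: dict) -> None: ...

    def select(self, table: str) -> list[dict]: ...


class InMemoryDatabase:
    """Hypothetical stand-in for a production, staging, or private database."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._tables: dict[str, list[dict]] = {}

    def insert(self, table: str, row: dict) -> None:
        self._tables.setdefault(table, []).append(dict(row))

    def select(self, table: str) -> list[dict]:
        return [dict(row) for row in self._tables.get(table, [])]


class OrderRecorder:
    """Gets told which database to use instead of picking one itself."""

    def __init__(self, db: Database) -> None:
        self._db = db

    def record(self, order_id: int, total: float) -> None:
        # Still plainly "writing to a database"; only the target is indirect.
        self._db.insert("orders", {"id": order_id, "total": total})


production = InMemoryDatabase("production")
private = InMemoryDatabase("private")

# The decision point: this line, not OrderRecorder, chooses the target.
recorder = OrderRecorder(private)
recorder.record(1, 9.99)
```

Reading `OrderRecorder` alone doesn’t tell you where the row ended up. You have to go find the line that did the injecting, which is exactly the tracing cost described above.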

An abstraction, on the other hand, changes what you can do with the underlying thing. The goal is to change the mental model of the thing. Instead of the verbs coming directly from the underlying thing(s), the abstraction defines its own operations. Some things that could have been done are not exposed. Some things that can’t be done directly are composed and presented as operations. Instead of the CRUD operations on a database, you might have Load, Save, and Filter on an entire dataset. And as a user of the abstraction, you don’t know (or care) if it’s a database, a bunch of files on disk, or a Mechanical Turk setup. Outside the abstraction, all you need to know is what the operations do. Inside the abstraction, you don’t care how it is used. You’ve reduced the cognitive load of the user, but also reduced the system’s flexibility.
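Here’s a rough Python sketch of what that might look like. The `Dataset` class and its method names are hypothetical, and backing it with a JSON file is purely an assumption to keep the example self-contained. It could just as well sit on top of a database. The point is that the caller only ever sees `load`, `save`, and `filter`.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable


class Dataset:
    """Hypothetical abstraction: whole-dataset verbs, storage details hidden."""

    def __init__(self, path: Path) -> None:
        # Storage detail. Callers never touch this, so it could be swapped
        # for a database or anything else without changing their code.
        self._path = path

    def load(self) -> list[dict]:
        """Return every record, or an empty list if nothing was saved yet."""
        if not self._path.exists():
            return []
        return json.loads(self._path.read_text(encoding="utf-8"))

    def save(self, records: list[dict]) -> None:
        """Replace the entire dataset with the given records."""
        self._path.write_text(json.dumps(records), encoding="utf-8")

    def filter(self, keep: Callable[[dict], bool]) -> list[dict]:
        """Return only the records for which keep(record) is true."""
        return [record for record in self.load() if keep(record)]


with tempfile.TemporaryDirectory() as tmp:
    orders = Dataset(Path(tmp) / "orders.json")
    orders.save([{"id": 1, "total": 9.99}, {"id": 2, "total": 120.0}])
    large_orders = orders.filter(lambda record: record["total"] > 100)
```

There’s no way to update a single row through this interface, and that’s deliberate in the sketch. The smaller mental model is paid for with the flexibility you gave up.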

Those two usages are clearly not the same. Yet I hear people using them interchangeably all the time. Not only does that cause communication problems when people aren’t talking about the same thing, it causes problems in code as well, particularly when a problem calls for an abstraction but someone provides a solution that is really an indirection.

Therein lies the problem. If you confuse the two you make things worse. The most common mistake I’ve seen is using an indirection and thinking you’ve created an abstraction. For example, there’s the Enterprise FizzBuzz. That’s about as indirect as you can get, all in the name of abstraction.

On a personal level, I once spent over a year working on a Java project that really embraced Lombok. The theory was that there was an object model that did what we needed and everything was late-bound in. In practice, everything was decorators and builders. Indirection everywhere. Tracing the code was almost impossible. Extending a model required changing multiple files that had no visible connection. You didn’t have to connect anything to anything else. But you also didn’t know what you needed to create or modify until things didn’t work. It was a nightmare to work with because it tried to be an abstraction, but all it really delivered was unneeded indirection.

I love abstractions. They lower cognitive load, help people do the right things easily, and make it hard to do the wrong thing. I like indirections as well. They provide flexibility and monitoring points. Just like a short link URL lets you move the actual link and not have anyone care, indirection lets you move things without users caring.

However, they are not the same thing, and if you confuse them you’ll eventually realize it. And pay the price.

---

¹ It’s also not the same as the difference between an abstraction and an interface.