What Are You Testing? II

I’ve written about what you’re testing before. That article was about writing tests so that you could look at a test and understand what it was and wasn’t testing. It was about writing readable, understandable code, and I stand by it.

However, there are other ways to think about what you’re testing. The first, and most obvious, is what functionality the test is validating: does the code do what you think it does? That’s pretty straightforward. You could also think about it the way I described in that article, in terms of how readable and understandable the test is. Or you could think a little more abstractly, about which part of the development process the test was written in support of.

Taking inspiration from Agile Otter, you can think about the test at the meta level. What are you writing the test for? Is the test there to help you write the code? To help you maintain the code? To validate your assumptions about how users will use your code? To characterize the performance of the code? To help you understand how your code fails, or what happens when some other part of the system fails? The reason you’re writing the test, and the requirements that follow from that reason, tell you how to write the test and what a successful result looks like.

A set of tests written to validate the internal functionality of a class, with knowledge of how the class operates, has very different characteristics from a test written to validate error handling when 25% of network traffic times out or is otherwise lost. Tests written to validate the public interface of that class look and feel different again. They all have different runtime characteristics, too, and are expected to be run at different times.
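To make that contrast concrete, here’s a minimal Python sketch. The retry helper `fetch_with_retry`, the `Timeout` exception, and the `LossyTransport` fake are all hypothetical, invented for illustration. The first test knows exactly how the retry loop works and checks it deterministically; the second treats the client as a black box and drops roughly 25% of requests to see how it copes.

```python
import random


class Timeout(Exception):
    """Raised by a transport when a request is lost or times out."""


def fetch_with_retry(send, request, max_attempts=3):
    """Hypothetical client helper: call send(request), retrying on Timeout."""
    for attempt in range(1, max_attempts + 1):
        try:
            return send(request)
        except Timeout:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller see the failure


# Unit-style test: written with knowledge of the retry logic, fully deterministic.
def test_retries_until_success():
    calls = []

    def send(request):
        calls.append(request)
        if len(calls) < 3:
            raise Timeout()
        return "ok"

    assert fetch_with_retry(send, "ping") == "ok"
    assert len(calls) == 3  # two failures, then one success


# Fault-injection style: a fake transport that loses a fixed share of traffic.
class LossyTransport:
    def __init__(self, loss_rate, seed=0):
        self.loss_rate = loss_rate
        self.rng = random.Random(seed)  # seeded so the run is repeatable

    def send(self, request):
        if self.rng.random() < self.loss_rate:
            raise Timeout()
        return "ok"


def test_survives_25_percent_loss(trials=10_000):
    transport = LossyTransport(loss_rate=0.25)
    failures = 0
    for i in range(trials):
        try:
            fetch_with_retry(transport.send, i)
        except Timeout:
            failures += 1
    # With 3 attempts, a request fails only if all three are lost: 0.25**3 ≈ 1.6%.
    assert failures / trials < 0.03
```

The first test runs almost instantly and belongs on every build. The second is statistical: it needs many trials, and against a real network rather than a seeded fake it would be slower and noisier, so it’s the kind of test you’d more likely run on a schedule or before a release.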

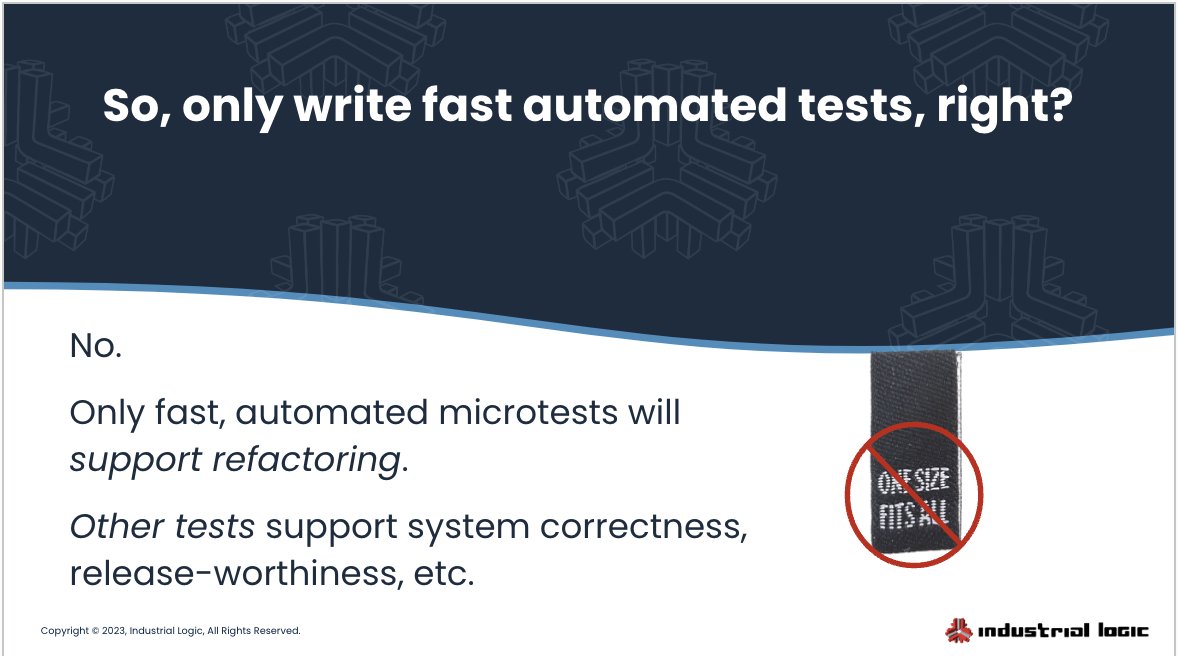

Knowing, understanding, and honoring those differences is key to writing good tests. The tests need to be correct not only in the sense that they test what their names say they test, but also in the sense that their results serve the meta goal the test was written for. Integration and system-level performance tests are great for testing how things work as a whole, but they’re terrible for making sure you cover all of the possible branches in a utility class. Crafting a specific input to the entire system and then expecting to control exactly which branches get executed through a call chain of multiple microservices and database transactions is not going to work. You need unit tests and class functionality tests for that. The same goes for refactoring. Testing a low-level refactor by exercising the whole system is unlikely to cover all of the cases and will probably take too long. On the other hand, if you have good unit tests for the public interface of a class, you can refactor to your heart’s content and feel confident that the system-level results will be the same.
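Here’s a sketch of what covering branches at the right level can look like, assuming a hypothetical `parse_duration` utility. Each branch gets its own small test that calls only the public function, something that would be very hard to arrange by feeding inputs to the whole system. Run it with a test runner such as `python -m unittest`.

```python
import unittest


def parse_duration(text):
    """Hypothetical utility: convert '250ms', '5s', '2m', or '7' (seconds) to seconds."""
    text = text.strip().lower()
    if not text:
        raise ValueError("empty duration")
    if text.endswith("ms"):  # checked before "s", since "ms" also ends in "s"
        return float(text[:-2]) / 1000
    if text.endswith("s"):
        return float(text[:-1])
    if text.endswith("m"):
        return float(text[:-1]) * 60
    return float(text)  # no unit: assume seconds; float() rejects garbage


class ParseDurationTest(unittest.TestCase):
    # One test per branch: easy at this level, nearly impossible through the whole system.
    def test_milliseconds(self):
        self.assertAlmostEqual(parse_duration("250ms"), 0.25)

    def test_seconds(self):
        self.assertEqual(parse_duration("5s"), 5.0)

    def test_minutes(self):
        self.assertEqual(parse_duration("2m"), 120.0)

    def test_bare_number_is_seconds(self):
        self.assertEqual(parse_duration(" 7 "), 7.0)

    def test_empty_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_duration("   ")

    def test_garbage_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_duration("soon")
```

Because the tests only touch `parse_duration`’s inputs and outputs, you could rewrite its internals, say with a lookup table of suffixes, and the same tests would tell you whether the behavior changed.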

Conversely, testing system performance by measuring the time taken in a specific function is unlikely to help you. Unless you already know that most of the total time is spent in that function, you’re fooling yourself if you think a single function tells you anything about system performance, even if it’s a long-running function. Say you do some work on a transaction and take it from 100ms to 10ms: a 90% reduction in time. Sounds great. But if you only measure that execution time, you don’t know the whole story. If the transaction only runs 0.1% of the time, and part of that workflow involves waiting for a user to respond to a notification, saving 90ms is probably never going to be noticed.
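The arithmetic behind that example is worth spelling out; it’s essentially the reasoning behind Amdahl’s law. The 100ms, 10ms, and 0.1% figures come from the example above; the 30-second wait for the user’s response is an assumption made up for illustration.

```python
def local_reduction(old_ms, new_ms):
    """Fraction of time removed from the function itself."""
    return (old_ms - new_ms) / old_ms


def average_saving_per_request(old_ms, new_ms, share_of_requests):
    """Expected time saved per request, averaged across all traffic."""
    return (old_ms - new_ms) * share_of_requests


def end_to_end_reduction(old_ms, new_ms, workflow_ms):
    """Fraction of an end-to-end workflow (which includes old_ms) that was removed."""
    return (old_ms - new_ms) / workflow_ms


old_ms, new_ms = 100.0, 10.0
share = 0.001            # the transaction runs in 0.1% of requests
workflow_ms = 30_000.0   # ASSUMPTION: about 30 s spent waiting on the user's response

print(f"local reduction:        {local_reduction(old_ms, new_ms):.0%}")
print(f"avg saving per request: {average_saving_per_request(old_ms, new_ms, share):.2f} ms")
print(f"end-to-end reduction:   {end_to_end_reduction(old_ms, new_ms, workflow_ms):.1%}")
```

With these inputs it prints a 90% local reduction, an average saving of 0.09 ms per request, and a 0.3% reduction in the end-to-end workflow. The function-level number is real, but it says very little about what users experience.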

So when you’re writing tests, don’t just remember what you’re testing; also keep in mind why you’re testing it.