Testing Schedules

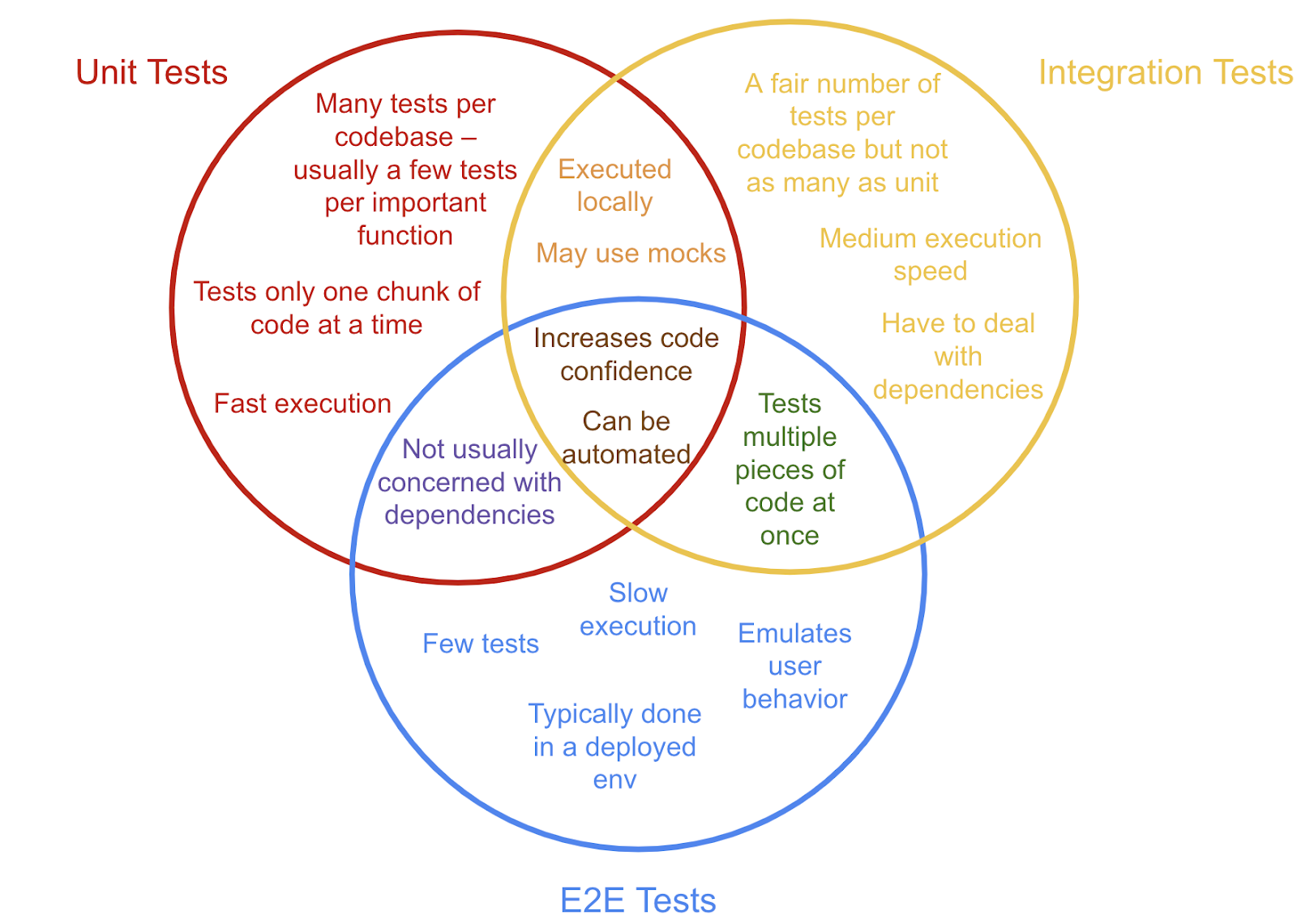

Yesterday I talked about different kinds of tests: unit, integration, and system. I mentioned that not only are there different kinds of tests, but those tests have different characteristics. In addition to differences in what each class of test can tell you, there are also differences in execution time and execution cost.

NB: This is semi-orthogonal to test-driven development. When you run the tests is not the same as when you write the tests.

These differences often lead to different schedules for running these tests. As with any dynamic system, one important tool for maintaining stability is a short feedback loop. The faster the tests run and the more often you run them, the shorter your feedback loop. The shorter your feedback loop, the faster you can get to the result you want.

Luckily, unit tests are both cheap and fast. That means you can run them a lot and get results quickly. The questions are: which ones do you run, and what does “a lot” mean? If you’ve got a small system and running all the tests takes a few seconds, run them all. In a more realistic setting, though, building and running any individual test is fast, but building and running all of them can take minutes or hours. That’s just not practical, so you need to do something else. This is where a dependency management system like Make or Bazel can help. You can set it up to run only the tests that directly depend on the code that changed. Combine that with some thoughtful code layout and you can relatively easily keep down the time it takes to run the relevant (directly impacted) tests.
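To make “run only the tests that directly depend on the code that changed” concrete, here is a minimal Python sketch. It assumes the build system can hand you a map from each test target to the source files it depends on directly. The `TEST_DEPS` table and the file and test names are hypothetical; in practice Make or Bazel derives that information from its own build files rather than from a hand-written dictionary.

```python
from typing import Dict, Iterable, Set

# Hypothetical build metadata: each test target lists the source files it
# depends on directly. A real build tool would derive this from its build files.
TEST_DEPS: Dict[str, Set[str]] = {
    "parser_test": {"parser.c", "lexer.c"},
    "lexer_test": {"lexer.c"},
    "codegen_test": {"codegen.c", "ir.c"},
}


def directly_affected_tests(changed: Iterable[str],
                            test_deps: Dict[str, Set[str]]) -> Set[str]:
    """Return the tests that list at least one changed file as a direct dependency."""
    changed_set = set(changed)
    return {test for test, deps in test_deps.items() if deps & changed_set}


if __name__ == "__main__":
    # Editing lexer.c selects the two tests that use it; codegen_test is skipped.
    print(sorted(directly_affected_tests(["lexer.c"], TEST_DEPS)))
```

The selection is a set intersection per test, so its cost scales with the size of the dependency table, not with how long the tests themselves take to run.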

Running quickly is important, but when do you run the tests? Recommendations vary from “every time a file is changed/saved” to “every time changes are stored in your shared version control system.” Personally, I think the answer is every time there’s a logical change made locally. Sometimes logical changes span files, so running tests based on any single file doesn’t make sense. You want to run the tests before you start a change, to make sure the tests are still valid; after each logical step in the overall change, to make sure you haven’t broken anything; and when you’re done, to make sure that everything still works.

That’s a good start, but it’s not enough. In a perfect world your unit tests would cover every possible combination of use cases for the SUT (system under test). But we don’t live in a perfect world, and as Hyrum’s Law tells us, someone, somewhere, is making use of some capability you don’t know you’ve exposed, a capability you don’t have a unit test for. So even when all your unit tests pass, you can still break something downstream. At some point you need to run all the unit tests for all the code that depends on the change, ideally before anyone else sees it. Run all those tests just before you push your changes to the shared version control system.
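The difference between “directly impacted” and “everything that depends on the change” is a graph traversal. Here is a minimal sketch, assuming a hypothetical module graph (`DEPENDS_ON`, mapping each module to the modules it imports) and a hypothetical mapping from modules to their unit tests (`UNIT_TESTS`). It inverts the edges so each module points at the modules that use it, then walks breadth-first from the changed modules to collect every transitive dependent.

```python
from collections import deque
from typing import Dict, Iterable, List, Set

# Hypothetical module graph: each module maps to the modules it imports.
DEPENDS_ON: Dict[str, List[str]] = {
    "storage": [],
    "cache": ["storage"],
    "api": ["cache"],
    "cli": ["api"],
    "metrics": [],
}

# Hypothetical mapping from each module to its own unit tests.
UNIT_TESTS: Dict[str, List[str]] = {
    "storage": ["storage_test"],
    "cache": ["cache_test"],
    "api": ["api_test"],
    "cli": ["cli_test"],
    "metrics": ["metrics_test"],
}


def reverse_edges(depends_on: Dict[str, List[str]]) -> Dict[str, Set[str]]:
    """Invert the graph so each module maps to the modules that import it."""
    dependents: Dict[str, Set[str]] = {m: set() for m in depends_on}
    for module, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(module)
    return dependents


def impacted_modules(changed: Iterable[str],
                     depends_on: Dict[str, List[str]]) -> Set[str]:
    """Changed modules plus everything that depends on them, directly or transitively."""
    dependents = reverse_edges(depends_on)
    start = list(changed)
    seen: Set[str] = set(start)
    queue = deque(start)
    while queue:
        module = queue.popleft()
        for user in dependents.get(module, set()):
            if user not in seen:
                seen.add(user)
                queue.append(user)
    return seen


def pre_push_tests(changed: Iterable[str],
                   depends_on: Dict[str, List[str]],
                   unit_tests: Dict[str, List[str]]) -> List[str]:
    """All unit tests belonging to any impacted module, in a stable order."""
    modules = impacted_modules(changed, depends_on)
    return sorted(test for m in modules for test in unit_tests.get(m, []))


if __name__ == "__main__":
    # A change to storage reaches cli through cache and api; metrics is untouched.
    print(pre_push_tests(["storage"], DEPENDS_ON, UNIT_TESTS))
```

A change to `storage` selects the tests for `cache`, `api`, and `cli` as well, because each of them reaches `storage` through the chain of imports, while `metrics_test` is skipped. Real build tools compute this for you; the sketch only shows the shape of the computation.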

Unfortunately, unit tests aren’t enough. Everything can work properly on its own, but things also must work well together. That’s why we have integration tests in the first place. When do you run them? They cost more and take longer than unit tests, but the same basic rule applies: run them when there’s a complete logical change. That means that when any component of an integration test changes, you run that integration test. And again, running only the directly impacted integration tests isn’t enough. Some integration tests exercise components that depend on the ones you changed, and you need to run those as well. Again, ideally before anyone else sees the change.
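For integration tests the selection rule changes slightly: a test is picked when any of the components it wires together is impacted, where “impacted” includes everything that transitively uses a changed component. A minimal sketch, assuming a hypothetical component graph (`USED_BY`, mapping each component to the components that use it directly) and hypothetical integration test names:

```python
from typing import Dict, Iterable, List, Set

# Hypothetical component graph: component -> components that use it directly.
USED_BY: Dict[str, Set[str]] = {
    "db": {"orders", "inventory"},
    "orders": {"checkout"},
    "inventory": set(),
    "payments": {"checkout"},
    "checkout": set(),
}

# Hypothetical integration tests and the components each one exercises together.
INTEGRATION_TESTS: Dict[str, Set[str]] = {
    "orders_db_it": {"orders", "db"},
    "checkout_payments_it": {"checkout", "payments"},
    "inventory_db_it": {"inventory", "db"},
}


def impacted(changed: Iterable[str], used_by: Dict[str, Set[str]]) -> Set[str]:
    """Changed components plus every component that transitively uses them."""
    result: Set[str] = set()
    stack = list(changed)
    while stack:
        component = stack.pop()
        if component not in result:
            result.add(component)
            stack.extend(used_by.get(component, set()))
    return result


def integration_tests_to_run(changed: Iterable[str],
                             used_by: Dict[str, Set[str]],
                             tests: Dict[str, Set[str]]) -> List[str]:
    """Select every integration test that touches at least one impacted component."""
    hit = impacted(changed, used_by)
    return sorted(name for name, parts in tests.items() if parts & hit)


if __name__ == "__main__":
    print(integration_tests_to_run(["orders"], USED_BY, INTEGRATION_TESTS))
```

Selecting only on the directly changed component, a change to `orders` would pick just `orders_db_it`. Following its dependents also pulls in `checkout_payments_it`, because `checkout` uses `orders`.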

Then we have system-level, or end-to-end, tests. Those tests are almost always slow, expensive, and dependent on real hardware. Every change impacts the system, but it’s just not practical to run them for every change. And even if you could, given the time those tests take (hours or days if real hardware is involved), running them for every change would slow you down so much you’d never get anything done. Of course, you need to run your system-level tests for every release, or you don’t know what you’re shipping, but that’s not enough. You need to run the system tests, or at least the relevant ones, often enough that you’ve got a fighting chance of figuring out which change made a test fail. How often that is depends on your system’s rate of change. For systems under active development, that might be every day, or at least several times per week; for systems that rarely change, it might be much less frequently.
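One way to reason about “often enough” is to count how many changes land between two system-test runs and how many extra runs a binary search (bisection) over them would need to find the culprit. This sketch assumes the failure is deterministic and caused by exactly one change; the change rate and run duration are made-up numbers for illustration, not measurements.

```python
import math


def candidate_changes(changes_per_day: float, days_between_runs: float) -> int:
    """Roughly how many changes land between two consecutive system-test runs."""
    return max(1, math.ceil(changes_per_day * days_between_runs))


def bisection_runs(candidates: int) -> int:
    """Worst-case extra runs to isolate one culprit among `candidates` changes.

    Binary search over N suspects needs ceil(log2(N)) tests; for N >= 1 that
    equals (N - 1).bit_length(), which avoids floating-point rounding.
    """
    if candidates <= 1:
        return 0
    return (candidates - 1).bit_length()


if __name__ == "__main__":
    changes_per_day = 20  # hypothetical rate of change
    hours_per_run = 8     # hypothetical system-test duration
    for label, days in [("daily", 1), ("twice a week", 3.5), ("weekly", 7)]:
        n = candidate_changes(changes_per_day, days)
        runs = bisection_runs(n)
        print(f"{label:>12}: {n:3d} suspects, {runs} bisection runs, "
              f"~{runs * hours_per_run} h to isolate")
```

The number of suspect changes grows linearly with the interval, while the number of bisection runs grows only logarithmically. Each of those runs is a full system-test run, though, so with multi-hour runs even a few extra steps add days before you know which change broke things, and real failures don’t always have a single deterministic culprit.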

There you have it:

- Unit tests on changed code run locally on every logical change before sharing, and centrally on everything impacted by the change after, or as part of, sharing with the rest of the team.
- Integration tests run locally on component-level changes before sharing, and centrally on everything impacted by the change after, or as part of, sharing with the rest of the team.
- System-level tests run on every release, and on a schedule that makes sense for the system’s rate of change.

Bonus points for allowing people to trigger system tests when they know there’s a high likelihood of emergent behavior to check for.