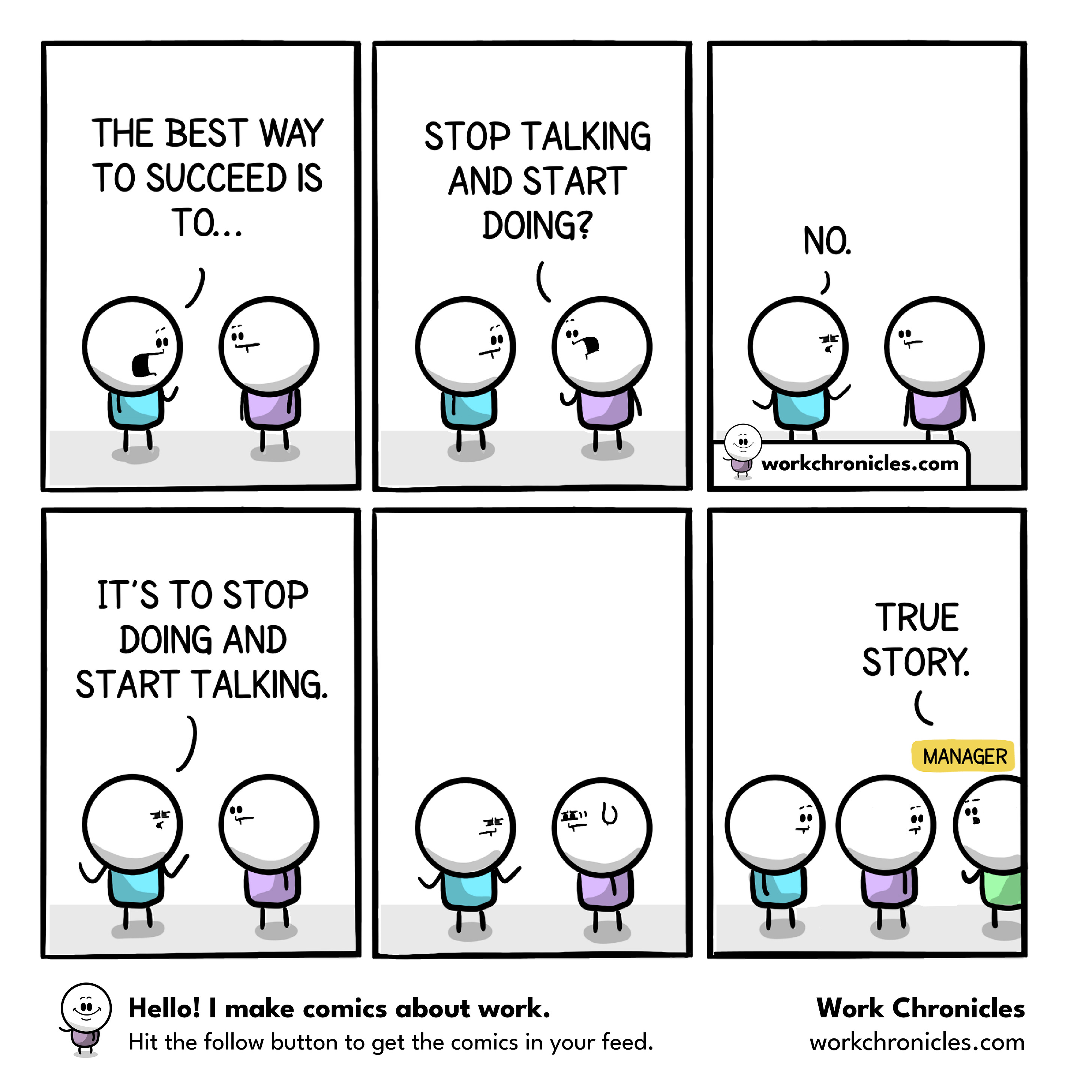

It's A Trap

Overheard:

… coming from another language and trying to find the library that makes it look like the language you came from is a trap

Pretty much all of the languages we write code in today are Turing complete, meaning they can be used to write a program that does just about anything you want. That means all languages are equivalent, and the choice doesn’t matter, right?

Wrong

And it’s wrong on many levels. Consider this simple case. You’re going to spend a day or two, by yourself, writing a tool to do a job you need done now. Somehow, you know that once you finish writing it, you’re never going to touch it again. You won’t need to debug it later. You won’t need to understand it again later. You won’t need to update it later. No one else will ever see the code. Even in that case, your choice of language matters. As Larry Wall said,

Computer languages differ not so much in what they make possible, but in what they make easy.

That means your choice of language is important even in the simplest situations.

Of course, we’re almost never in the simplest situation. You will need to debug the code. You will need to understand it later. You will need to update it. And you won’t be the only one who needs to do all those things. After all, your code needs to be readable and understandable not just by you, when you’ve got all the context, but by someone else in the future who doesn’t have it. And it’s that future which influences the choice of language the most: the context the code will live in, and the context the people using and maintaining it will be working in. If the tool you’re writing is going to be part of a website’s front end and needs to extend and connect with a large body of JavaScript, you should probably use JavaScript, not PHP.

Of course, choosing a language is only the first part. There’s always a tendency, especially when you’re learning the ins and outs of a new language, to adjust to the new syntax but keep doing things the way you’re used to doing them. After all, you can write code in just about any language that looks like the C code in the K&R book, and it might feel easier to write that way at the beginning.

Which gets back to that original thing I overheard. It’s a trap. Just because you can make one language look like another doesn’t mean you should. There are better choices for how to write things.

Languages have idioms. And ecosystems. When you write Python, you should be Pythonic. Code in Go should follow the Go Proverbs. C++ has its own set of idioms, and they’ve evolved over the years. Regardless of the language, you should understand and work with those idioms. The libraries, tools, and examples will be using those idioms. Working with them is going to be easier than trying to make your code look like the last language you worked with. You should never be in a position where you’re working against the code or trying to coerce it into doing what you want. You never want to make things harder for yourself.
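To make that concrete, here’s a small illustration of my own (the task and function names are made up for the example): the same job written twice in Python, first the way you might write it if you were still thinking in C, then the way most Python programmers would expect to read it. Both return the same result. The difference is how much the reader has to track in their head.

```python
# Hypothetical task: collect the squares of the even numbers in a list.

def squares_of_evens_c_style(numbers):
    # K&R-style habits carried over: a manual index, a manual bounds check,
    # and a manual increment.
    result = []
    i = 0
    while i < len(numbers):
        if numbers[i] % 2 == 0:
            result.append(numbers[i] * numbers[i])
        i = i + 1
    return result


def squares_of_evens_pythonic(numbers):
    # The same logic as a list comprehension that iterates over the values
    # directly, with no index bookkeeping.
    return [n * n for n in numbers if n % 2 == 0]


if __name__ == "__main__":
    data = [1, 2, 3, 4, 5, 6]
    print(squares_of_evens_c_style(data))   # [4, 16, 36]
    print(squares_of_evens_pythonic(data))  # [4, 16, 36]
```

Neither version is wrong, but only the second one looks like the code in the Python documentation, tutorials, and libraries you’ll be reading alongside it.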

Follow those idioms not just because it makes the code easier to write, but because software development is a social activity. Working with the idioms will make things easier and clearer in the future. For people familiar with the language, it reduces the cognitive load of reading the code. Things make more sense, and you’re working with people’s expectations, not against them.

After all, you, and everyone else involved, are going to be living in the future, so have pity on future you.