Loops and Ratios

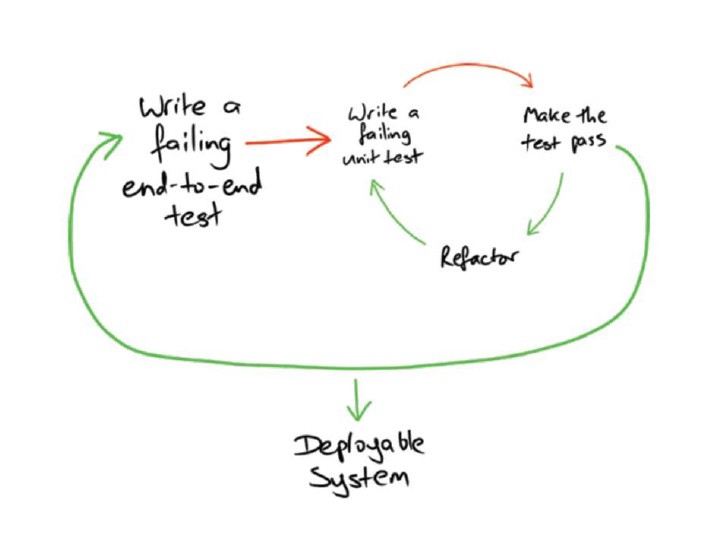

Test Driven Development, as I’ve said before, is really design by example. But it’s also a systems thinking approach to the problem. Loops within loops. Feedback and feed forward. Change something (a requirement or a result) in one loop and it impacts the other.

What that image doesn’t capture, though, is the impact of time spent on various parts of the loop. It doesn’t touch on how the ratios of those times form incentives. And it really doesn’t say anything about how having the wrong ratios can lead to some pretty odd incentives, which then lead to behaviors that are, shall we say, sub-optimal.

Since time isn’t part of the picture, a natural assumption is that each step takes the same amount of time, and the arrows don’t take any time. Of course, that’s not true. “Make the test pass” is where you spend the majority of the time, right? If that’s true then things work out the way you want them. The incentive is to make the tests pass and you don’t need to compensate for anything. Things move forward and people are happy.

We want continuous integration. We want commits that do one thing. We want reversible changes. We don’t want long-lived branches. We don’t want big-bang changes. Those lead to code divergence and merge hell. And no one wants that. When most of the time is spent in “Make the test pass”, the incentives match and we’re naturally pushed in that direction.

Unfortunately, that distribution of time spent isn’t always the case. There are loops hidden inside each step. Those loops have their own time distribution. To make matters worse, the arrows are actually loops as well. Nothing is instant, and time gets spent in places somewhat outside your control. It’s that time, and the ratio of those times, that leads to bad incentives.

For instance, “Make the test pass” is really a loop of write code -> run tests -> identify issues. If the majority of time is in “write code”, great. But if it takes longer to run the tests than it does to write the code or identify issues, then the incentive changes from “make a small change and validate it” to “make a bunch of changes and validate them”. This makes folks feel more efficient because less time is spent waiting. And it might really make the individual more efficient, but it comes with all the downsides of bigger changes. More churn. More merge issues. Later deployment.
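To put rough numbers on that incentive, here is a minimal sketch. It assumes a deliberately simple model: every change costs the same time to write and to triage, and one test run covers the whole batch. The function names and the minute values are made up for illustration, not measurements from any real team.

```python
def minutes_per_change(write_min, test_min, triage_min, batch_size):
    """Average minutes spent per change when batch_size changes share one test run.

    Hypothetical model: writing and triage scale with the number of changes,
    while the test run is paid once per batch.
    """
    total = batch_size * (write_min + triage_min) + test_min
    return total / batch_size


def waiting_share(write_min, test_min, triage_min, batch_size):
    """Fraction of the whole loop spent waiting on the test run."""
    total = batch_size * (write_min + triage_min) + test_min
    return test_min / total


if __name__ == "__main__":
    # Assumed numbers: 5 minutes to write a change, 2 minutes to triage it.
    for test_min in (1, 30):
        for batch_size in (1, 10):
            per_change = minutes_per_change(5, test_min, 2, batch_size)
            share = waiting_share(5, test_min, 2, batch_size)
            print(
                f"tests={test_min:>2} min, batch={batch_size:>2}: "
                f"{per_change:.1f} min/change, {share:.0%} waiting"
            )
```

With a one minute test run, batching barely changes anything, so small changes stay cheap. With a thirty minute test run, a single change costs 37 minutes while a batch of ten costs 10 minutes each. That is the pull toward bigger changes. The model leaves out what batching costs later (harder triage, bigger merges), which is exactly the part the individual doesn’t feel.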

Consider the arrow to deployable system. It’s not just an arrow. That arrow is hiding at least two loops: Create/Edit a PR/Commit -> Have it reviewed/tested -> Understand feedback, and Deploy to environment X -> Validate -> Fix. Those loops can take days. Depending on what’s involved in testing, they can take weeks. If it takes weeks to get a commit deployed, imagine how far out of sync everyone else is going to be when it gets deployed. Especially if you’ve saved some time by having multiple changes in that deployment.
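A back-of-the-envelope way to see the “out of sync” problem, again as a sketch with invented numbers: assume everyone else keeps committing at a steady rate while your change sits in the review and deploy loops. The function name and the rates below are hypothetical.

```python
def commits_landed_meanwhile(lead_time_days, other_devs, commits_per_dev_per_day):
    """Estimate how many commits from other people land while one change waits.

    Assumes a constant, made-up commit rate per developer.
    """
    return lead_time_days * other_devs * commits_per_dev_per_day


if __name__ == "__main__":
    # Hypothetical team: 5 other developers, 2 commits each per day.
    for lead_time_days in (0.5, 3, 10):
        drift = commits_landed_meanwhile(lead_time_days, 5, 2)
        print(f"{lead_time_days:>4} days to deploy -> about {drift:.0f} commits to reconcile with")
```

Half a day of lead time means reconciling with a handful of commits. Ten working days means about a hundred, and that is before anyone else starts batching their changes too.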

All of those (and other) delays lead to bigger changes and more churn across the system. Churn leads to more issues. Incompatible changes. Bad merges. Regressions. All because the time ratios are misaligned. So before you think about dropping things from the workflow, think about how you can make those steps take less time. After all, chances are those steps in the workflow are there for a reason.