WIP and Queuing Theory

You never know where things will back up.

I’ve talked about [flow] a few times now. It’s a great state to be in and you can be very productive. On the other hand, having too much WIP inhibits flow and slows you down. And it slows you down by more than the context switching time (although that is a big issue in itself). A common refrain I hear, though, goes something like “I need to be working on so many things at the same time, otherwise I’m sitting around doing nothing while I wait for someone else.”

On the surface that seems like a reasonable concern. After all, isn’t it more efficient to be doing something rather than not doing anything? As they say, it depends. It depends on how you’re measuring efficiency. As an individual, if you don’t wait then you’re clearly busier. Your utilization is up, and if you think utilization is the same thing as efficiency then yes, the individual efficiency is higher.

On the other hand, if you look at how much is getting finished (not started), you’ll see that staying busy will reduce how much gets finished, not increase it. It’s because of queuing theory. Instead of waiting for someone to finish their part of a task before you get to your part, you start something else. Then, when the other person finishes their part, the work sits idle while you finish whatever thing you just started. Since the other person is waiting for you to do your part, they start something else. Eventually you get to that shared thing and do your part. But now the other person is busy doing something new, so they don’t get to it until they finish. So instead of you originally waiting for someone else to finish, the work ends up waiting. Waiting at each transition. The more transitions, the more delay you’ve added to the elapsed time. Everyone can do every task in the optimum amount of time, but you’ve still added lots of delay by having the work sit idle.
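To put rough numbers on that, here is a minimal sketch. The function name `elapsed_time` and the figures are made up for illustration, and it assumes the work waits the same amount of time at every handoff, which real work won’t do.

```python
def elapsed_time(touch_times, wait_per_handoff):
    """Return (elapsed time, fraction of that time the work was being touched).

    touch_times: hands-on time for each person's part, in order.
    wait_per_handoff: assumed idle time at each transition while the
    next person finishes whatever else they started.
    """
    handoffs = len(touch_times) - 1
    touch = sum(touch_times)
    elapsed = touch + handoffs * wait_per_handoff
    return elapsed, touch / elapsed


# Three parts of 2 days each, with the work sitting 3 days at each handoff.
print(elapsed_time([2, 2, 2], 3))  # (12, 0.5)
```

Nobody got slower at their own part. The work still took twice as long as the hands-on time, and every extra transition adds another wait.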

Explaining dynamic things with text is hard. Luckily there are other options. Like this video by Michel Grootjans where he shows a bunch of simulations of how limiting WIP (and swarming) can dramatically improve throughput and reduce cycle time. Check it out. I’ll wait.

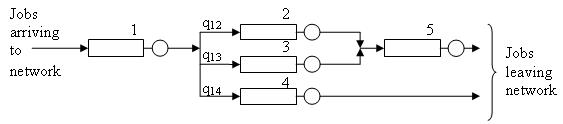

What really stands out is that the queues that appear between each phase in a task’s timeline are what cause the delays. With 3 phases there are 2 queues. In this case there’s only one bottleneck, so only one queue ever got very deep, but you can imagine what would happen if there were more phases/transitions. Whenever a downstream phase takes longer than its predecessor, the queue between them will grow. If there’s no limit, that queue ends up holding most of the work. Adding a WIP limit doesn’t appreciably change the total time, since the queue was just letting the work sit there, but it does reduce the cycle time for a given task. Each task spends much less time in a queue.
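If you’d rather poke at the numbers than watch a video, here is a rough sketch of the same effect. It is not Michel’s simulation. It assumes one worker per phase, fixed phase durations, and tasks handled in order; the `simulate` function and the durations are invented for illustration.

```python
def simulate(durations, n_tasks, wip_limit=None):
    """Push n_tasks through a line of single-worker phases.

    Returns (total time, average cycle time). A task's cycle time runs
    from when it enters the first phase until it leaves the last one.
    """
    phase_free_at = [0] * len(durations)  # when each phase's worker is next free
    started, finished = [], []

    for i in range(n_tasks):
        ready = phase_free_at[0]
        if wip_limit is not None and i >= wip_limit:
            # Don't start a new task until an earlier one is completely done.
            ready = max(ready, finished[i - wip_limit])
        started.append(ready)

        t = ready
        for p, d in enumerate(durations):
            # Wait in the queue until this phase's worker is free, then do the work.
            t = max(t, phase_free_at[p]) + d
            phase_free_at[p] = t
        finished.append(t)

    avg_cycle = sum(f - s for s, f in zip(started, finished)) / n_tasks
    return finished[-1], avg_cycle


phases = [1, 3, 1]  # assumed days per phase; the middle phase is the bottleneck
print(simulate(phases, 10))               # (32, 14.0)
print(simulate(phases, 10, wip_limit=2))  # (32, 6.0)
```

Both runs finish all 10 tasks on day 32, because the bottleneck sets the pace either way. The average cycle time, though, drops from 14 days to 6. That lines up with Little’s Law (average cycle time = average WIP / throughput): with 2 tasks in progress and one task finishing every 3 days, you get 6.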

And that cycle time is the real win. Unless you’ve done a perfect job up front of defining the tasks, limiting WIP gives you the opportunity to learn from the work you’ve done. In Michel’s example, if you learned you needed to make a UX change to something, you could make it before all of the UX work was finished. You’d still have the UX person around and they could incorporate those learnings into future tasks. You’ve actually eliminated a bunch of rework by simply not doing the work until you know exactly what it is.

Of course, that was a simple simulation where each task of a given type takes, on average, the same amount of time. In reality there’s probably more variance in task length than shown. It also assumes the length of time doesn’t depend on which worker gets the task. Again, not quite correct, but things average out.

Even with those caveats, the two big learnings are very apparent. Limit WIP and share the work. Eliminate the queues and reduce specialization and bottlenecks. Everyone will be happier and you can release something better sooner. Without doing more work. And being able to stay in flow.